By Carol Peters, December 27, 2024

Batch processing is a method of processing large volumes of data in a systematic and efficient manner. It involves grouping similar tasks together and executing them as a batch, rather than processing each task individually. This approach is commonly used in various industries, including finance, manufacturing, and telecommunications, where large amounts of data need to be processed regularly.

Batch processing offers several advantages over other processing methods, such as real-time processing. It allows organizations to process large volumes of data without overwhelming their systems, as tasks are executed in batches, reducing the strain on resources. Additionally, batch processing enables organizations to automate repetitive tasks, saving time and effort.

Understanding Batch Processing: Definition and Key Concepts

Batch processing can be defined as the execution of a series of tasks or jobs in a sequential manner, without any user interaction. These tasks are typically related and can be processed together, resulting in increased efficiency and reduced processing time. The key concept of batch processing is the grouping of similar tasks into batches, which are then processed as a unit.
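
As a minimal sketch of the grouping idea, the Python snippet below splits a stream of records into fixed-size batches and processes each batch as a unit. The names `make_batches` and `process_batch`, the batch size of 4, and the "sum the records" workload are illustrative assumptions, not part of any particular batch system.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def make_batches(items: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive lists holding at most batch_size items each."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def process_batch(batch: List[int]) -> int:
    # Hypothetical unit of work: here we simply sum the records in the batch.
    return sum(batch)


records = range(1, 11)  # ten records: 1..10
totals = [process_batch(batch) for batch in make_batches(records, batch_size=4)]
print(totals)  # [10, 26, 19]
```

The last batch is smaller than the others because ten records do not divide evenly by four; a real job usually has to tolerate a short final batch in the same way.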

In batch processing, tasks are typically scheduled to run at specific times or intervals, allowing organizations to process data during off-peak hours or when system resources are less utilized. This ensures that the processing does not impact the performance of other critical systems or applications.
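
To illustrate scheduling for an off-peak window, here is a small sketch that computes when a nightly job should next run. The function name `next_run` and the 02:00 start time are assumptions chosen for the example, and the code uses naive datetimes, so a production scheduler would also need to handle time zones and daylight saving changes.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, run_at: time) -> datetime:
    """Return the next datetime strictly after `now` at which the job should start."""
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        # Today's window has already passed, so run tomorrow instead.
        candidate += timedelta(days=1)
    return candidate


off_peak = time(2, 0)  # assumed off-peak start: 02:00
print(next_run(datetime(2024, 12, 27, 15, 0), off_peak))  # 2024-12-28 02:00:00
print(next_run(datetime(2024, 12, 27, 1, 0), off_peak))   # 2024-12-27 02:00:00
```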

Benefits and Limitations of Batch Processing

Batch processing offers several benefits to organizations. Firstly, it allows for the efficient processing of large volumes of data. By grouping similar tasks together, organizations can process data in batches, reducing the overall processing time and improving efficiency.

Secondly, it enables organizations to automate repetitive tasks. By defining a set of rules or instructions, organizations can automate the execution of tasks, eliminating the need for manual intervention. This not only saves time but also reduces the risk of human error.

However, batch processing also has limitations. One is the delay between data input and processing: because records are accumulated and processed together, results are not available until the next batch run completes. This delay may not be suitable for applications that require real-time processing or immediate feedback.

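Another limitation is the lack of flexibility. A batch job runs its tasks in a predefined order on a predefined schedule, so individual tasks cannot easily be reprioritized or run on demand once the batch has been defined. This can be a limitation for applications that require dynamic processing or have complex dependencies between tasks. Independent batches or data partitions can still run in parallel; the constraint applies to the order of steps within a job.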

Common Use Cases and Best Examples of Batch Processing

Batch processing is widely used in various industries and applications. Some common use cases include:

1. Billing and invoicing: Many organizations use batch processing to generate invoices and process billing information. By processing billing data in batches, organizations can efficiently generate invoices for large numbers of customers.

2. Data integration and ETL (Extract, Transform, Load): Batch processing is commonly used in data integration and ETL processes. Organizations can extract data from multiple sources, transform it into a common format, and load it into a target system in scheduled batch runs.

3. Report generation: Batch processing is often used to generate reports from large datasets. Organizations can schedule report generation tasks to run at specific times, ensuring that reports are available when needed.

4. Data backup and archiving: Batch processing is commonly used for data backup and archiving purposes. Organizations can schedule batch jobs to back up or archive data at regular intervals, ensuring data integrity and availability.
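
The ETL use case above can be sketched in a few lines of Python using only the standard library. Everything specific here is an assumption made for illustration: the inline `RAW_CSV` data stands in for a source file, the `payments` table and its columns are hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Assumed input: a small CSV export with inconsistent spacing and casing.
RAW_CSV = """customer,amount
Alice, 10.50
bob,4.25
alice,2.00
"""


def extract(text):
    """Extract: parse the raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize customer names and convert amounts to integer cents."""
    return [
        (row["customer"].strip().lower(), round(float(row["amount"]) * 100))
        for row in rows
    ]


def load(conn, rows):
    """Load: insert the whole batch in one transaction (rolled back on error)."""
    with conn:
        conn.executemany(
            "INSERT INTO payments (customer, amount_cents) VALUES (?, ?)", rows
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (customer TEXT, amount_cents INTEGER)")
load(conn, transform(extract(RAW_CSV)))

totals = dict(
    conn.execute("SELECT customer, SUM(amount_cents) FROM payments GROUP BY customer")
)
print(totals)
```

Loading the batch inside a single transaction means a failure partway through leaves the target table unchanged, which makes the job safe to rerun.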

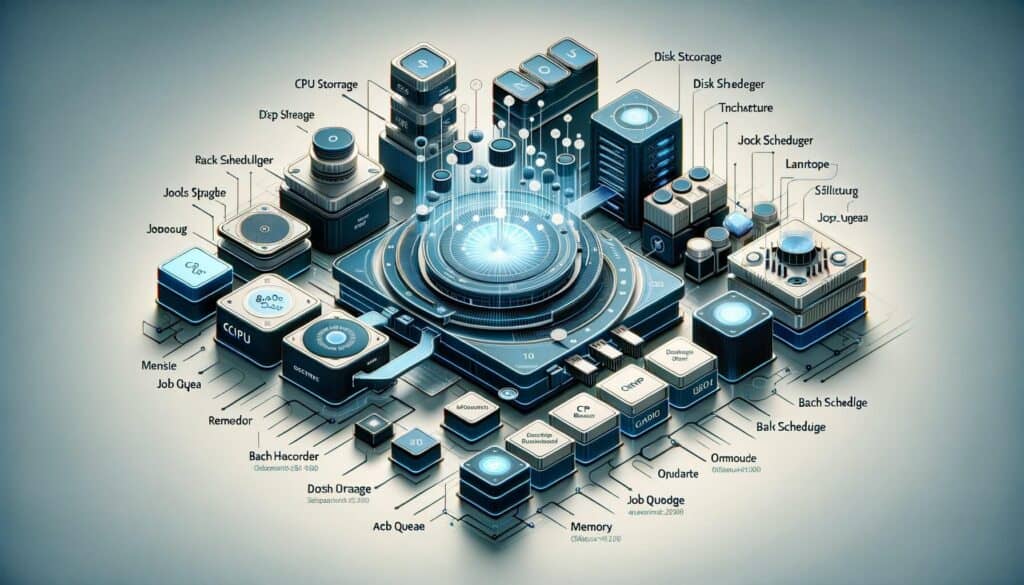

Key Components and Architecture of Batch Processing Systems

Batch processing systems typically consist of several key components and follow a specific architecture. The key components include:

1. Job scheduler: The job scheduler is responsible for managing and scheduling batch jobs. It determines when and how often jobs should run, based on predefined schedules or triggers.

2. Job queue: The job queue is a storage area where batch jobs are stored before they are executed. It ensures that jobs are processed in the correct order and prevents job conflicts.

3. Job executor: The job executor is responsible for executing batch jobs. It retrieves jobs from the job queue and executes them according to predefined instructions or rules.

4. Data storage: Batch processing systems require a data storage component to hold input and output data. This can be a database, a file system, or any other storage medium.

The architecture of a batch processing system typically follows a client-server model. The client component is responsible for submitting batch jobs and monitoring their progress, while the server component is responsible for executing the jobs and managing system resources.
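
The following toy sketch shows how the four components described above might fit together in a single process. The class names mirror the component names in this section, but their methods (`schedule`, `release_due`, `submit`, `next_job`, `run_all`) are hypothetical, time is modeled as a simple integer tick, and a plain dictionary stands in for the data storage component.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List, Optional, Tuple


@dataclass
class Job:
    name: str
    action: Callable[[], object]


class JobQueue:
    """Holds jobs in first-in, first-out order until they are executed."""

    def __init__(self) -> None:
        self._jobs: Deque[Job] = deque()

    def submit(self, job: Job) -> None:
        self._jobs.append(job)

    def next_job(self) -> Optional[Job]:
        return self._jobs.popleft() if self._jobs else None


class JobScheduler:
    """Releases jobs into the queue once their due tick has been reached."""

    def __init__(self, queue: JobQueue) -> None:
        self.queue = queue
        self._pending: List[Tuple[int, Job]] = []

    def schedule(self, due_tick: int, job: Job) -> None:
        self._pending.append((due_tick, job))

    def release_due(self, current_tick: int) -> None:
        due = [pair for pair in self._pending if pair[0] <= current_tick]
        self._pending = [pair for pair in self._pending if pair[0] > current_tick]
        for _, job in sorted(due, key=lambda pair: pair[0]):
            self.queue.submit(job)


class JobExecutor:
    """Pulls jobs from the queue, runs them, and writes results to storage."""

    def __init__(self, queue: JobQueue, storage: Dict[str, object]) -> None:
        self.queue = queue
        self.storage = storage

    def run_all(self) -> List[str]:
        executed = []
        while (job := self.queue.next_job()) is not None:
            self.storage[job.name] = job.action()
            executed.append(job.name)
        return executed


storage: Dict[str, object] = {}  # stand-in for a database or file system
queue = JobQueue()
scheduler = JobScheduler(queue)
executor = JobExecutor(queue, storage)

scheduler.schedule(2, Job("report", lambda: "report ready"))
scheduler.schedule(1, Job("backup", lambda: "backup done"))
scheduler.schedule(5, Job("archive", lambda: "archived"))

scheduler.release_due(current_tick=3)  # "archive" is not due yet
executed = executor.run_all()
print(executed)  # ['backup', 'report']
```

In a real deployment these pieces usually run as separate services, with the queue and storage persisted so that jobs survive a restart.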

Designing and Implementing Effective Batch Processing Workflows

Designing and implementing effective batch processing workflows requires careful planning and consideration of various factors. Here are some key steps to follow:

1. Identify tasks and dependencies: Start by identifying the tasks that need to be processed and their dependencies. Determine the order in which tasks should be executed and any dependencies between them.

2. Define job parameters: Define the parameters and inputs required for each job. This includes specifying the input data, any required transformations or calculations, and the expected output.

3. Design error handling and recovery mechanisms: Plan for error handling and recovery in case of job failures or exceptions. Define how errors will be logged, how failed jobs will be retried, and how the system will recover from failures.

4. Optimize resource utilization: Consider resource utilization when designing batch processing workflows. Ensure that jobs are scheduled to run during off-peak hours or when system resources are less utilized to avoid impacting the performance of other critical systems.

5. Test and validate workflows: Before deploying batch processing workflows, thoroughly test and validate them. This includes testing different scenarios, validating input and output data, and ensuring that the workflows meet the desired requirements.
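
Step 1 above, identifying tasks and their dependencies, maps naturally onto a topological sort. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later); the task names in `workflow` and the helper `execution_order` are hypothetical examples.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies):
    """Return task names ordered so every task comes after its prerequisites."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # The detected cycle is the second element of the exception's args.
        raise ValueError(f"circular dependency between tasks: {exc.args[1]}") from exc


# Each key is a task; each value is the set of tasks it depends on.
workflow = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "load": {"join"},
    "report": {"load"},
}

order = execution_order(workflow)
print(order)
```

The two extract tasks have no dependency on each other, so their relative order is not fixed; a scheduler is free to run them in either order or at the same time.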

Tools and Technologies for Batch Processing

There are several tools and technologies available for batch processing, depending on the specific requirements and use cases. Some popular tools and technologies include:

1. Apache Hadoop: Apache Hadoop is an open-source framework that provides distributed storage and processing capabilities. It is commonly used for batch processing large datasets.

2. Apache Spark: Apache Spark is another open-source framework that provides fast, general-purpose data processing. It supports batch processing, stream (near real-time) processing, and machine learning.

3. Apache Airflow: Apache Airflow is an open-source platform for programmatically authoring, scheduling, and monitoring workflows. It provides a rich set of features for designing and managing batch processing workflows.

4. IBM InfoSphere DataStage: IBM InfoSphere DataStage is a data integration and ETL tool that supports batch processing. It provides a visual interface for designing and implementing batch processing workflows.

Performance Optimization and Scalability in Batch Processing

Performance optimization and scalability are crucial considerations in batch processing systems, especially when dealing with large volumes of data. Here are some tips for optimizing performance and ensuring scalability:

1. Use parallel processing: Take advantage of parallel processing capabilities to distribute the workload across multiple resources. This can significantly improve processing speed and reduce overall processing time.

2. Optimize data storage and retrieval: Optimize the storage and retrieval of data to minimize I/O operations. This can be achieved by using efficient data structures, indexing, and caching mechanisms.

3. Monitor and tune system resources: Regularly monitor system resources, such as CPU, memory, and disk usage, to identify any bottlenecks or performance issues. Tune the system configuration and resource allocation as needed to optimize performance.

4. Implement data partitioning and sharding: Partition large datasets into smaller subsets and distribute them across multiple resources. This can improve processing speed and enable better resource utilization.
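
Tips 1 and 4 above can be combined in a short sketch: partition the data, process the partitions concurrently, then merge the partial results. The helper names `partition` and `process_partition`, the four workers, and the sum-of-squares workload are assumptions for illustration. The example uses `ThreadPoolExecutor` to stay simple; for CPU-bound work in CPython, `ProcessPoolExecutor` from the same module is usually the better fit.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records, num_partitions):
    """Split records into num_partitions roughly equal slices (round-robin)."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def process_partition(part):
    # Hypothetical per-partition work: sum of squares of the records.
    return sum(x * x for x in part)


records = list(range(1000))
parts = partition(records, num_partitions=4)

# Each partition is handled by its own worker; map() preserves partition order.
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(process_partition, parts))

total = sum(partial_sums)  # merge step
print(total)  # 332833500
```

This only works cleanly because the partitions are independent and the merge step (a sum) does not care about order; workloads with cross-partition dependencies need a more careful design.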

Troubleshooting and Error Handling in Batch Processing

Troubleshooting and error handling are critical aspects of batch processing systems. Here are some best practices for troubleshooting and handling errors:

1. Implement logging and monitoring: Implement a robust logging and monitoring mechanism to capture and track errors. Log relevant information, such as error messages, timestamps, and job details, to facilitate troubleshooting.

2. Define error handling strategies: Define error handling strategies for different types of errors. This includes defining how errors will be logged, how failed jobs will be retried, and how the system will recover from failures.

3. Implement job status tracking: Implement a mechanism to track the status of batch jobs. This allows for easy identification of failed or incomplete jobs and enables timely intervention.

4. Conduct regular error analysis: Regularly analyze error logs and identify recurring errors or patterns. This can help identify underlying issues and guide improvements in the batch processing system.
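
The practices above (logging, a retry strategy, and job status tracking) can be sketched together as follows. The function `run_with_retry`, the status strings, the job names, and the retry limits are all hypothetical choices for the example; a production system would typically persist the status table and add a backoff between attempts.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("batch")


def run_with_retry(name, action, status, max_attempts=3, delay_seconds=0.0):
    """Run action, retrying on failure, and record the job's status by name."""
    for attempt in range(1, max_attempts + 1):
        status[name] = "running"
        try:
            result = action()
        except Exception:
            # log.exception records the message together with the traceback.
            log.exception("job %s failed on attempt %d/%d", name, attempt, max_attempts)
            if attempt < max_attempts:
                time.sleep(delay_seconds)
        else:
            status[name] = "succeeded"
            log.info("job %s succeeded on attempt %d", name, attempt)
            return result
    status[name] = "failed"
    return None


calls = {"count": 0}


def flaky():
    """Simulated job that fails twice with a transient error, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary outage")
    return "ok"


status = {}
result = run_with_retry("nightly_export", flaky, status)
run_with_retry("always_broken", lambda: 1 / 0, status, max_attempts=2)
print(status)  # {'nightly_export': 'succeeded', 'always_broken': 'failed'}
```

The resulting `status` dictionary is the simplest possible form of job status tracking: anything still marked "failed" after the run is a candidate for manual intervention or later error analysis.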

FAQs

Q1. What is the difference between batch processing and real-time processing?

Batch processing collects tasks and processes them in groups, usually on a schedule, while real-time processing handles each task as it arrives, with minimal delay.

Q2. Can batch processing be used for real-time applications?

Batch processing is generally not suitable for real-time applications that require immediate processing or feedback, because results only become available after a batch run completes. Real-time processing systems are designed to handle tasks as they occur, with minimal delay.

Q3. What are the advantages of batch processing?

Batch processing offers several advantages, including efficient processing of large volumes of data, automation of repetitive tasks, and reduced strain on system resources.

Q4. What are the limitations of batch processing?

Batch processing has limitations, such as the delay between data input and processing, lack of flexibility in task execution, and the need for predefined schedules or triggers.

Q5. What are some common examples of batch processing?

Some common examples of batch processing include billing and invoicing, data integration and ETL processes, report generation, and data backup and archiving.

Conclusion

Batch processing is a powerful method for processing large volumes of data in a systematic and efficient manner. It offers several benefits, including efficient processing, automation of repetitive tasks, and reduced strain on system resources. However, it also has limitations, such as the delay between data input and processing and the lack of flexibility in task execution.

To design and implement effective batch processing workflows, organizations need to carefully plan and consider factors such as task dependencies, job parameters, error handling, and resource utilization. There are several tools and technologies available for batch processing, including Apache Hadoop, Apache Spark, Apache Airflow, and IBM InfoSphere DataStage.

Performance optimization and scalability are crucial considerations in batch processing systems. Organizations can optimize performance by using parallel processing, optimizing data storage and retrieval, monitoring and tuning system resources, and implementing data partitioning and sharding. Troubleshooting and error handling are also critical aspects of these systems, and organizations should implement logging and monitoring mechanisms, define error handling strategies, implement job status tracking, and conduct regular error analysis.

Overall, batch processing is a valuable technique for organizations that need to process large volumes of data efficiently and automate repetitive tasks. By understanding the key concepts, benefits, limitations, and best practices of batch processing, organizations can leverage this method to improve their data processing capabilities and achieve better operational efficiency.